Fundamentals of Arbitrary Waveform Generation Ch. 2

Note: On the previous server, someone left a comment that DSPs work quite differently. Unfortunately, this comment got lost during the migration. Please feel free to contact me if you spot any errors.

You can think of generating a sampled signal as generating a train of $\delta$-pulses, ideally filtered by a "brick wall" low-pass filter with its cut-off at half the sampling frequency (the Nyquist frequency). In the time domain, this brick-wall filter is a convolution with a sinc function.

In reality, you don't generate $\delta$-pulses but zero-order-hold (rectangular) pulses. This in itself is a crude low-pass filter with a sinc-shaped frequency response; you can show it attenuates by 3.92 dB at the Nyquist frequency. You have to correct for this "filter" with a subsequent (analog) interpolation filter.
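A quick numerical check of the 3.92 dB figure: a zero-order hold lasting one sample period has the magnitude response $|\sin(\pi f/f_s)/(\pi f/f_s)|$, where $f_s$ is just the sampling rate here. The helper name is mine, and frequencies are passed as fractions of $f_s$.

```python
import math


def zoh_droop_db(f_over_fs):
    """Magnitude response (dB) of a zero-order hold lasting one sample period.

    f_over_fs is the frequency normalized to the sampling rate (0.5 = Nyquist).
    """
    if f_over_fs == 0:
        return 0.0
    x = math.pi * f_over_fs
    return 20 * math.log10(abs(math.sin(x) / x))


# Droop at a few fractions of the sampling rate.
for ratio in (0.1, 0.25, 0.4, 0.5):
    print(f"f = {ratio:.2f} fs: {zoh_droop_db(ratio):6.2f} dB")
```

At 0.5 (the Nyquist frequency) this evaluates to about -3.92 dB, which is the droop the interpolation filter or a pre-distortion has to make up for.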

The interpolation filter can't be made to roll off sharply at the Nyquist frequency, so aliasing-free AWG only works up to somewhat below it.

You can further improve the output by pre-distorting the DAC-input.

The publication is a bit weak on nomenclature when describing the different sampling frequencies involved in AWGs, so I'll define three quantities: the sampling frequency of the waveform, $f_\text{wf}$; the sampling frequency of the DAC, $f_\text{DAC}$; and the access rate of the memory, $f_\text{mem}$. These might all be quite different from each other. It's probably best to think of $f_\text{wf}$ as variable (for sweeps and such).

There are different AWG architectures.

- True Arb: Variable $f_\text{DAC}$, so that every sample is played, no matter what $f_\text{wf}$ is used.

- Direct Digital Synthesis (DDS): Fixed $f_\text{DAC}$ + "phase accumulator", which tells you which sample to play.

- Interpolating DAC: $f_\text{DAC}$ is higher than the access rate of the memory, $f_\text{mem}$, with DSP filling in the blanks. The max. frequency is still limited by $f_\text{mem}$, but images are pushed up to $f_\text{DAC}$. This makes filtering them easier. Furthermore, DAC noise is spread over a larger spectrum.

- Pseudo Interleaving DAC: Two True Arbs running with a half-sample shift whose outputs are added. This is similar to IQ, no? Gives full access up to (almost) $f_\text{DAC}$, but is sensitive to timing errors.

- Trueform: Looks to me like an interpolating DAC, but $f_\text{DAC}$ and $f_\text{mem}$ are not necessarily so different. Thus, the DSP specializes in filling in the blanks for arbitrary $f_\text{wf}$. Is this marketing BS?

DDS vs. True Arb: DDS needs the time resolution of the waveform to be as good as possible. If features are shorter than one DAC sample period, $1/f_\text{DAC}$, they might be skipped. A digital low-pass filter up front helps. A DDS can have $f_\text{wf} = f_\text{DAC}$ (every sample played exactly once), but this makes sweeping impossible.
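A minimal sketch of the DDS idea, assuming a 32-bit phase accumulator and a 1024-entry waveform table (the names `tuning_word`, `acc_bits`, `table_bits` and the sizes are illustrative, not taken from any instrument). On every DAC clock the tuning word is added to the accumulator, and its top bits address the table. With a tuning word larger than one table step, entries get skipped, which is how a one-entry-wide feature can vanish from the output.

```python
def dds_indices(tuning_word, n_clocks, acc_bits=32, table_bits=10):
    """Table indices a DDS plays on successive DAC clocks.

    The phase accumulator wraps modulo 2**acc_bits; its top `table_bits` bits
    address the waveform table.
    """
    mask = (1 << acc_bits) - 1
    shift = acc_bits - table_bits
    phase = 0
    indices = []
    for _ in range(n_clocks):
        indices.append(phase >> shift)
        phase = (phase + tuning_word) & mask
    return indices


def output_frequency(tuning_word, f_dac, acc_bits=32):
    """Output frequency when the table holds exactly one period of the waveform."""
    return tuning_word * f_dac / (1 << acc_bits)


# 1024-entry table, zero except for a one-entry "spike" at index 101.
table = [0.0] * 1024
table[101] = 1.0

step = 1 << (32 - 10)  # one table entry per clock: every sample is played
fast = 4 * step        # four entries per clock: three out of four are skipped

slow_out = [table[i] for i in dds_indices(step, 1024)]
fast_out = [table[i] for i in dds_indices(fast, 1024)]
print("spike visible at 1 entry/clock:", max(slow_out) == 1.0)
print("spike visible at 4 entries/clock:", max(fast_out) == 1.0)
print("output frequency at 1 entry/clock, f_dac = 100 MHz:", output_frequency(step, 100e6), "Hz")
```

Low-pass filtering the table first, as suggested above, would smear the spike over neighbouring entries so that part of it survives the skipping.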

Details about Trueform: It's a kind of DDS architecture where the waveform is interpolated between samples (upsampling -> FIR low-pass filter -> decimation). The digital filter can be optimized (user-set) for flat frequency response or for precise timing (no ringing).
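The upsampling -> FIR low-pass -> decimation chain can be sketched as a textbook rational resampler. This is not Keysight's actual implementation: the Hann-windowed sinc filter, the 63 taps and the `up`/`down` names are my own choices for illustration.

```python
import math


def lowpass_taps(num_taps, cutoff):
    """Hann-windowed sinc low-pass FIR with unity DC gain.

    cutoff is normalized to the sample rate the filter runs at (0 < cutoff < 0.5);
    num_taps should be odd so the filter has an integer centre tap.
    """
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        t = n - mid
        ideal = 2 * cutoff if t == 0 else math.sin(2 * math.pi * cutoff * t) / (math.pi * t)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [h / total for h in taps]


def resample(x, up, down, num_taps=63):
    """Change the sample rate by up/down: zero-stuff, low-pass filter, decimate."""
    # 1) Upsample: insert up-1 zeros after every sample (gain `up` keeps the level).
    stuffed = []
    for sample in x:
        stuffed.append(sample * up)
        stuffed.extend([0.0] * (up - 1))
    # 2) Low-pass below the lower of the input and output Nyquist frequencies.
    taps = lowpass_taps(num_taps, 0.5 / max(up, down))
    delay = (num_taps - 1) // 2
    filtered = []
    for n in range(len(stuffed)):
        acc = 0.0
        for k, h in enumerate(taps):
            i = n + delay - k  # centred (zero-phase) filtering, no output delay
            if 0 <= i < len(stuffed):
                acc += h * stuffed[i]
        filtered.append(acc)
    # 3) Decimate: keep every `down`-th sample.
    return filtered[::down]


# Example: a sine with 30 samples per period, resampled by 3/2 (45 samples per period).
x = [math.sin(2 * math.pi * m / 30) for m in range(90)]
y = resample(x, up=3, down=2)
print(len(x), "->", len(y), "samples")
```

Swapping the windowed sinc for a filter with less overshoot would be the "precise timing" setting; a sharper one would be the "flat frequency response" setting.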

A typical vertical resolution is 10-14 bits.

The limited vertical resolution leads to quantization noise. Its amplitude is bounded by ±1/2 LSB, and it often has a flat spectrum. In reality, though, it's periodic, and if you are unlucky the period is short and you get noise peaks (spurs). Quantization noise goes down by about 6 dB per additional bit. The noise power density goes down with a higher sampling rate, because the total quantization noise power stays roughly constant while being spread over a wider bandwidth.
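The 6 dB-per-bit rule can be checked by quantizing a full-scale sine and comparing the measured signal-to-quantization-noise ratio with the textbook $6.02N + 1.76$ dB. This is a sketch with an ideal mid-tread rounding quantizer; the function names and the default test frequency are my own choices.

```python
import math


def ideal_sqnr_db(bits):
    """Textbook SQNR of an ideal N-bit quantizer driven by a full-scale sine."""
    return 6.02 * bits + 1.76


def measured_sqnr_db(bits, n_samples=100_000, cycles_per_sample=(math.sqrt(5) - 1) / 40):
    """Quantize a full-scale sine (mid-tread rounding) and compare signal and error power.

    The default frequency is not a simple fraction of the sample rate, so the
    quantization error does not repeat with a short period.
    """
    amplitude = 1.0
    lsb = 2 * amplitude / 2 ** bits  # peak-to-peak range divided into 2**bits steps
    signal_power = 0.0
    error_power = 0.0
    for n in range(n_samples):
        x = amplitude * math.sin(2 * math.pi * cycles_per_sample * n)
        q = lsb * round(x / lsb)
        signal_power += x * x
        error_power += (q - x) ** 2
    return 10 * math.log10(signal_power / error_power)


for b in (10, 12, 14):
    print(f"{b} bit: {ideal_sqnr_db(b):.2f} dB ideal, {measured_sqnr_db(b):.2f} dB simulated")
```

Setting `cycles_per_sample` to a simple fraction such as 1/64 makes the error repeat every 64 samples, which is the "unlucky" periodic case from the paragraph above.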

You always have thermal noise, with a power density of -174 dBm/Hz at room temperature.

At a high enough sampling rate, the quantization noise density will be lower than the thermal noise floor. For 12 bits, this happens at about 12 GSa/s; for 14 bits, at about 0.8 GSa/s (assuming a 0.7 Vpp full-scale signal).
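The crossover rates above can be reproduced under a few assumptions of mine: a 50 Ω load, an LSB of $V_{pp}/2^N$, and a quantization noise power of $\mathrm{LSB}^2/12$ spread evenly over a bandwidth equal to the full sampling rate. With that convention the sketch gives about 12.2 and 0.76 GSa/s. If the noise is instead spread over $0 \ldots f_s/2$ only, both rates double, so treat the figures as order-of-magnitude estimates. The function and parameter names are hypothetical.

```python
import math

THERMAL_DBM_PER_HZ = -174.0  # room-temperature thermal noise density quoted above


def crossover_rate(bits, vpp=0.7, load_ohm=50.0, noise_bw_per_fs=1.0):
    """Sampling rate at which the quantization noise density equals the thermal floor.

    Quantization noise power is LSB**2 / 12 delivered into `load_ohm`, assumed to be
    spread evenly over a bandwidth of noise_bw_per_fs * fs.
    """
    lsb = vpp / 2 ** bits
    p_quant_w = lsb ** 2 / 12 / load_ohm
    thermal_w_per_hz = 10 ** (THERMAL_DBM_PER_HZ / 10) / 1000  # dBm/Hz -> W/Hz
    return p_quant_w / (thermal_w_per_hz * noise_bw_per_fs)


for b in (12, 14):
    full = crossover_rate(b, noise_bw_per_fs=1.0)
    half = crossover_rate(b, noise_bw_per_fs=0.5)
    print(f"{b} bit: {full / 1e9:.2f} GSa/s (noise over fs), {half / 1e9:.2f} GSa/s (noise over fs/2)")
```

Two extra bits lower the quantization noise power by a factor of 16, so the crossover rate drops by the same factor, which is exactly the ratio between the 12-bit and 14-bit figures.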

Linear DAC errors are trivially corrected for. Nonlinearities lead to harmonics and intermodulation products, which are all folded back into the band. Dynamic DAC errors are the worst. Among these are switching glitches (different timing between different bits, plus overshoots). The so-called "major carry transition" from 0111111111 to 1000000000 is especially bad, even though it's only a change of one LSB, because every bit switches at once.
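To see why the major carry is special, count how many bit switches change state on a one-LSB step. This assumes a plain binary-weighted DAC in which every switching bit is a potential glitch source; the helper names are mine.

```python
def toggled_bits(old_code, new_code):
    """Number of DAC bit switches that change state between two codes."""
    return bin(old_code ^ new_code).count("1")


def worst_single_lsb_steps(bits):
    """Return (max toggles, codes where it happens) over all +1 LSB steps."""
    counts = {c: toggled_bits(c, c + 1) for c in range(2 ** bits - 1)}
    worst = max(counts.values())
    return worst, [c for c, n in counts.items() if n == worst]


print(toggled_bits(0b0111111111, 0b1000000000))  # major carry of a 10-bit DAC: all 10 bits
print(worst_single_lsb_steps(10))                 # the mid-scale step is the unique worst case
```

Most one-LSB steps toggle only one or two bits; the mid-scale step toggles all of them, so any timing skew between bits shows up there as the largest glitch.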

Another DAC error comes from the limited slew rate (the maximum rate of change of the output; this is also limited by the output amp's bandwidth).

Slew rate and switching glitches lead to a non-zero settling time. (There is also a fixed delay time, but this does not matter much and is consequently often not included in the settling-time spec.)

If you want to minimize nonlinearities, it might make sense to use an amplitude smaller than full scale (FS).

SFDR stands for spurious-free dynamic range: the ratio of the carrier to the largest spur. It's usually given in dBc, sometimes only including non-harmonic spurs. SFDR in dBFS is the same thing, but referenced to full scale instead of the carrier.
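Written out, with the carrier at $P_c$ and the largest spur at $P_s$ (both in dBFS), the two numbers differ only by how far the carrier sits below full scale:

$$
\mathrm{SFDR}_\mathrm{dBc} = P_c - P_s, \qquad \mathrm{SFDR}_\mathrm{dBFS} = 0 - P_s = \mathrm{SFDR}_\mathrm{dBc} - P_c
$$

For example (made-up numbers), a carrier at $-6$ dBFS and a largest spur at $-76$ dBFS give an SFDR of 70 dBc, but 76 dBFS.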

Non-harmonic spurs are caused by bleed-through of internal oscillators (e.g. switch-mode power supplies) or, sometimes, by quantization noise.

You also have white-noise sources everywhere, at the very minimum the thermal noise.

Sampling-clock jitter leads to phase noise of the carrier. Other sources of phase noise include DAC sampling uncertainty, data-signal jitter and current-source skew. (Even after finishing the publication, I am not sure what these last three are.)
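A common rule of thumb for how much clock jitter costs is the textbook estimate for white, random jitter on an otherwise ideal converter, $\mathrm{SNR} = -20\log_{10}(2\pi f \sigma_t)$. The notes above don't give a formula, so take this as an outside approximation; the function name is mine. It shows why jitter matters more at higher carrier frequencies: every doubling of $f$ costs about 6 dB.

```python
import math


def jitter_limited_snr_db(f_out_hz, rms_jitter_s):
    """SNR limit from random sampling-clock jitter for a full-scale sine."""
    return -20 * math.log10(2 * math.pi * f_out_hz * rms_jitter_s)


for f in (10e6, 100e6, 1e9):
    print(f"{f / 1e6:7.0f} MHz, 1 ps rms jitter: {jitter_limited_snr_db(f, 1e-12):5.1f} dB")
```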

Don't trust the SFDR specs. These are not necessarily tested for all possible carrier frequencies.

THD is total harmonic distortion: the r.m.s. sum of the amplitudes of the first $n$ harmonics ($n$ should be stated in the spec) divided by the signal amplitude. Usually given in %, sometimes in dBc (then, of course, it's expressed as a power ratio).

SNR: Signal power / noise (floor) power, with the noise power integrated from 0 to half the sampling frequency, excluding spurs.

SINAD: Signal power/(noise+distortion) power.

ENOB: Effective number of bits, i.e. the number of bits at which the quantization noise power of an ideal DAC would equal the measured noise+distortion power.
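To make these definitions concrete, here is a sketch that computes them from a synthetic, coherently sampled test tone (the fundamental sits exactly on a DFT bin). Everything is illustrative: the helper names, bin numbers and noise level are made up. Non-harmonic spurs are lumped into the noise, and THD uses harmonics 2 to 5. ENOB uses the usual $(\mathrm{SINAD} - 1.76)/6.02$.

```python
import cmath
import math
import random


def bin_power(x, k):
    """Power of the sinusoid sitting exactly in DFT bin k (0 < k < len(x)/2)."""
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * ((k * i) % n) / n) for i, v in enumerate(x))
    return 2 * abs(acc) ** 2 / n ** 2


def dac_metrics(x, fund_bin, n_harmonics=5):
    """THD, SNR, SINAD and ENOB of a coherently sampled test tone.

    Assumes no DC, harmonics 2..n_harmonics below Nyquist, and treats everything
    that is neither the fundamental nor a harmonic as noise.
    """
    n = len(x)
    total = sum(v * v for v in x) / n
    p_fund = bin_power(x, fund_bin)
    p_harm = sum(bin_power(x, h * fund_bin) for h in range(2, n_harmonics + 1))
    p_noise = total - p_fund - p_harm
    thd = math.sqrt(p_harm / p_fund)  # ratio of r.m.s. amplitudes
    sinad_db = 10 * math.log10(p_fund / (p_noise + p_harm))
    return {
        "thd_pct": 100 * thd,
        "thd_dbc": 20 * math.log10(thd),
        "snr_db": 10 * math.log10(p_fund / p_noise),
        "sinad_db": sinad_db,
        "enob": (sinad_db - 1.76) / 6.02,
    }


# Synthetic test tone: fundamental in bin 67 of 4096, two small harmonics, white noise.
rng = random.Random(1)
N, K = 4096, 67
tone = [math.cos(2 * math.pi * ((K * i) % N) / N)
        + 1e-3 * math.cos(2 * math.pi * ((2 * K * i) % N) / N)
        + 5e-4 * math.cos(2 * math.pi * ((3 * K * i) % N) / N)
        + rng.gauss(0.0, 1e-4)
        for i in range(N)]
print(dac_metrics(tone, K))
```

With these made-up levels, the noise alone would allow about 77 dB SNR, but the two harmonics pull SINAD down to about 59 dB, i.e. roughly 9.5 effective bits.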

Old DACs (like, Lord Kelvin old) were just a string of resistors and lots of switches: a string DAC.

The implementation of modern DACs is based on lots of current sources. Each current source is just a transistor biased with a constant gate voltage. This causes a constant current to flow through a resistor towards the (negative) supply rail. The "upper rail" of each source is switched by two transistors (one driven by the signal, the other by not(signal)) either to GND or to the "DAC network". This DAC network is often just the inverting input of a single op-amp. The op-amp is operated with negative feedback and keeps that input at (virtual) ground. The feedback path goes across a resistor and carries the combined current of all the current sources, so the output voltage is proportional to that current. (I guess a diagram would help me here, future me. Look at page 60 of the PDF.) Bottom line: the current sources pull a varying amount of current through a single resistor, and the voltage across this resistor is the output.

There are two approaches for an $N$-bit DAC: you can either use $2^N - 1$ identical current sources (less nonlinearity) or $N$ binary-weighted current sources (less complexity).

In real life, the two architectures are often mixed: a "unit element section" (sometimes called "thermometer section") is used for the most significant bits (to reduce nonlinearity where it matters), and a "binary weighted section" for the least significant bits. These are called "hybrid" or "segmented" DACs.

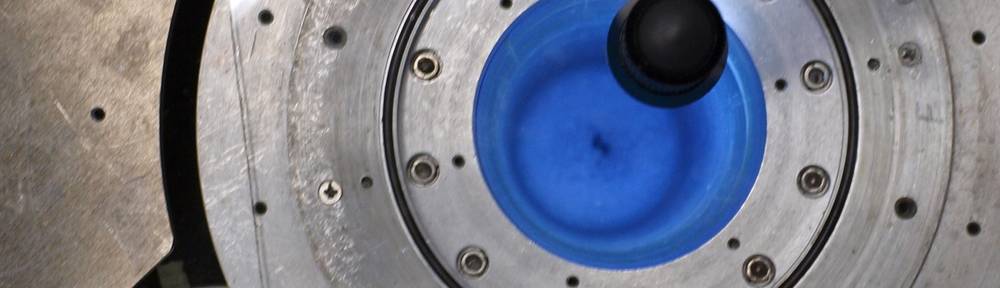
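A behavioural sketch of such a segmented current-steering DAC, including the summing op-amp from the paragraph before, assuming ideal switches, perfectly matched sources and an ideal op-amp. The 10-bit / 4-MSB split, the 1 µA unit current and the 1 kΩ feedback resistor are arbitrary example values, and the function names are hypothetical.

```python
def segmented_switches(code, bits, msb_bits):
    """Split a DAC code into thermometer-coded MSB switches and binary LSB switches."""
    lsb_bits = bits - msb_bits
    msb_value = code >> lsb_bits
    lsb_value = code & ((1 << lsb_bits) - 1)
    unary_on = [i < msb_value for i in range(2 ** msb_bits - 1)]      # thermometer code
    binary_on = [bool((lsb_value >> k) & 1) for k in range(lsb_bits)]  # plain binary
    return unary_on, binary_on


def output_voltage(code, bits=10, msb_bits=4, i_lsb=1e-6, r_feedback=1e3):
    """Ideal op-amp output for a segmented current-steering DAC.

    Each unary element sinks 2**lsb_bits * i_lsb, binary element k sinks 2**k * i_lsb.
    The sources pull their current out of the virtual-ground node, so the op-amp
    supplies it through r_feedback and the output rises to r_feedback * I_total.
    """
    lsb_bits = bits - msb_bits
    unary_on, binary_on = segmented_switches(code, bits, msb_bits)
    i_total = sum(2 ** lsb_bits * i_lsb for on in unary_on if on)
    i_total += sum(2 ** k * i_lsb for k, on in enumerate(binary_on) if on)
    return r_feedback * i_total


unary, binary = segmented_switches(0b1011000101, bits=10, msb_bits=4)
print(sum(unary), "of", len(unary), "unit elements on; binary LSBs:", binary)
print("sources: segmented", len(unary) + len(binary), "vs fully unary", 2 ** 10 - 1, "vs fully binary", 10)
print(output_voltage(0b1011000101), "V")
```

The segmented split needs 21 sources instead of 1023, yet each MSB step still just switches on one more identical unit element, which keeps the big transitions well behaved.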

Deglitching the signal can be done by "resampling" it. You feed the DAC output into a track-and-hold section. This track-and-hold section samples the signal (again, at the same rate as the DAC) just after the glitches have died down, and then holds it. It's important that this section is linear. You can do something similar with each of the current sources; Agilent loves this. It's not explained very well (the picture is blurry).

Some of the advanced deglitching techniques can cause the signal to die down (Return to Zero) while the DAC is glitching. This can distort the signal, so some ARBs allow you to turn this feature off.

Another technique that helps with deglitching is using dual DAC cores, offset by half a cycle. This allows distributed deglitching without the Return to Zero problem. Alternatively, you live with the Return to Zero, which reduces amplitude but improves image rejection in the other half of the Nyquist band. Finally, you can run the two cores independently and thereby generate frequencies throughout the entire Nyquist band (similar to IQ stuff).

After the DAC, there can be several signal paths. If the user chooses "Direct DAC" output, no further processing happens. Often, the output filters and amplifiers offer a "Time domain optimization" (no ringing, low jitter) and a "Frequency domain optimization" (high dynamic range, flatness, good image removal). For our applications, I think we want the time domain optimization.

Typical output filters are 4th-order Bessel filters. They have a worse roll-off than Butterworth and Chebyshev filters, but a better step response (almost no ringing).
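To put numbers on the step-response trade-off, the sketch below integrates the unit-step response of a 4th-order Bessel and a 4th-order Butterworth low-pass. These are the textbook normalized prototypes, not the filters of any particular instrument. It should report well under 1 % overshoot for the Bessel and roughly 11 % for the Butterworth. The RK4 integrator and the function name are my own choices.

```python
def step_overshoot(den, t_end=25.0, dt=2e-3):
    """Fractional overshoot of the unit-step response of H(s) = den[-1] / den(s).

    den holds the denominator coefficients from s**n down to s**0 (den[0] == 1),
    so H has unity DC gain. The ODE is integrated with fixed-step RK4.
    """
    order = len(den) - 1
    a = den[1:][::-1]  # a[i] multiplies the i-th derivative of the output
    gain = den[-1]

    def deriv(z):
        # z = [y, y', ..., y^(order-1)]; the input is a unit step.
        highest = gain - sum(a[i] * z[i] for i in range(order))
        return z[1:] + [highest]

    z = [0.0] * order
    peak = 0.0
    for _ in range(int(t_end / dt)):
        k1 = deriv(z)
        k2 = deriv([zi + dt / 2 * ki for zi, ki in zip(z, k1)])
        k3 = deriv([zi + dt / 2 * ki for zi, ki in zip(z, k2)])
        k4 = deriv([zi + dt * ki for zi, ki in zip(z, k3)])
        z = [zi + dt / 6 * (p + 2 * q + 2 * r + s)
             for zi, p, q, r, s in zip(z, k1, k2, k3, k4)]
        peak = max(peak, z[0])
    return peak - 1.0


BESSEL4 = [1.0, 10.0, 45.0, 105.0, 105.0]              # 4th-order Bessel, normalized to 1 s delay
BUTTER4 = [1.0, 2.6131259, 3.4142136, 2.6131259, 1.0]  # 4th-order Butterworth, cutoff 1 rad/s
print(f"Bessel overshoot:      {100 * step_overshoot(BESSEL4):.2f} %")
print(f"Butterworth overshoot: {100 * step_overshoot(BUTTER4):.2f} %")
```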

For time-optimized output, Agilent recommends choosing the filter such that the rise time is adequate, and then setting the sample rate so high that the images are moved into the "well-enough" attenuated band of that filter. With a Bessel filter, you typically need at least 4x oversampling (instead of the 2x required by Nyquist).
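A rough feel for where the 4x comes from: the sketch places the -3 dB corner of the same normalized 4th-order Bessel prototype at the highest signal frequency and evaluates the attenuation at the first image of that frequency, $f_s - f_\text{max}$. Putting the corner exactly at $f_\text{max}$ and the listed oversampling factors are my assumptions for illustration; the helper names are hypothetical.

```python
import math

BESSEL4 = [1.0, 10.0, 45.0, 105.0, 105.0]  # delay-normalized 4th-order Bessel, s**4 ... s**0


def gain_db(den, w):
    """|H(jw)| in dB for H(s) = den[-1] / den(s)."""
    s = 1j * w
    d = sum(c * s ** (len(den) - 1 - i) for i, c in enumerate(den))
    return 20 * math.log10(abs(den[-1] / d))


def cutoff_3db(den, lo=0.0, hi=100.0):
    """Find the -3.01 dB frequency by bisection (magnitude assumed monotonic)."""
    target = 20 * math.log10(1 / math.sqrt(2))
    for _ in range(100):
        mid = (lo + hi) / 2
        if gain_db(den, mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


wc = cutoff_3db(BESSEL4)
# Signal content up to wc; with fs = oversampling * wc the first image sits at fs - wc.
for oversampling in (2, 4, 8):
    image = (oversampling - 1) * wc
    print(f"{oversampling}x: image at {image / wc:.0f} x cutoff, {gain_db(BESSEL4, image):6.1f} dB")
```

At 2x the image lands right at the corner and is barely attenuated; at 4x it is roughly 25 dB down. That is the gentle roll-off you pay for the clean step response.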

Frequency-optimized signals need a filter that cancels the frequency response of the DAC's zero-order hold. These filters are called equalizing filters. You can also do this, to some extent, on the digital data before it is sent to the DAC, either by precomputation or, in the case of our 33500 and 33600 (?), "on the fly" with the built-in DSP block.
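One way to do the digital pre-distortion is to run the samples through a short FIR whose gain rises toward Nyquist, partly cancelling the sinc droop of the zero-order hold. The 3-tap filter below is a hypothetical minimal example (not what the 33500/33600 DSP block does), with frequencies normalized to the DAC sample rate. At 0.4 of the DAC rate it reduces the droop from about -2.4 dB to about -0.65 dB; longer filters get closer to flat.

```python
import math


def zoh_gain(x):
    """Zero-order-hold magnitude at normalized frequency x = f / f_dac."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def fir_gain(taps, x):
    """Magnitude of a symmetric (linear-phase) FIR with an odd number of taps."""
    mid = len(taps) // 2
    h = taps[mid] + sum(2 * taps[mid + k] * math.cos(2 * math.pi * k * x) for k in range(1, mid + 1))
    return abs(h)


# Hypothetical 3-tap pre-emphasis filter: unity gain at DC, +1.9 dB at Nyquist.
PRE_EMPHASIS = [-1 / 16, 9 / 8, -1 / 16]

for x in (0.0, 0.2, 0.4, 0.5):
    raw = 20 * math.log10(zoh_gain(x))
    fixed = 20 * math.log10(zoh_gain(x) * fir_gain(PRE_EMPHASIS, x))
    print(f"f = {x:.1f} f_dac: {raw:6.2f} dB uncorrected, {fixed:6.2f} dB pre-distorted")
```

Because the boost is applied to the digital data, it eats into the DAC's headroom near full scale. This is one reason to leave some margin below FS, as mentioned earlier.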